A to Z of Excel Functions: The LOGEST Function

1 November 2021

Welcome back to our regular A to Z of Excel Functions blog. Today we look at the LOGEST function.

The LOGEST function

Sometimes, you wish to forecast what comes next in a sequence, i.e. make a forecast. There are various approaches you could use:

- Naïve method: this really does live up to its billing – you simply use the last number in the sequence, e.g. the continuation of the series 8, 17, 13, 15, 19, 14, … would be 14, 14, 14, 14, … Hmm, great

- Simple average: only a slightly better idea: here, you use the average of the historical series, e.g. for the continuation of the series 8, 17, 13, 15, 19, 14, … would be 14.3, 14.3, 14.3, 14.3, …

- Moving average: now we start to look at smoothing out the trends by taking the average of the last n items. For example, if n were 3, then the sequence continuation of 8, 17, 13, 15, 19, 14, … would be 16, 16.3, 15.4, 15.9, 15.9, …

- Weighted moving average: the criticism of the moving average is that older periods carry as much weighting as more recent periods, which is often not the case. Therefore, a weighted moving average is a moving average where within the sliding window values are given different weights, typically so that more recent points matter more. For example, instead of selecting a window size, it requires a list of weights (which should add up to 1). As an illustration, if we picked four periods and [0.1, 0.2, 0.3, 0.4] as weights, we would be giving 10%, 20%, 30% and 40% to the last 4 points respectively which would add up to 1 (which is what it would need to do to compute the average).

Therefore the continuation of the series 8, 17, 13, 15, 19, 14, … would be 15.6, 15.7, 15.7, 15.5, 15.6, …

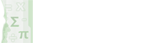

- Regression analysis: this is a technique where you plot an independent variable on the x (horizontal axis) against a dependent variable on the y (vertical) axis. “Independent” means a variable you may select (e.g. “June”, “Product A”) and dependent means the result of that choice or selection.

For example, if you plotted your observable data on a chart, it might look something like this:

There is a statistical technique where you may actually draw the “best straight line” through the data using an approach such as Ordinary Least Squares. Once you have worked it out, you can calculate the gradient (m) and where the line cuts the y axis (the y intercept, c). This gives you the equation of a straight line:

y = mx + c

Therefore, for any independent value x, the dependent value y may be calculated – and we can use this formula for forecasting. Of course, this technique looks for a straight line and is known as linear regression

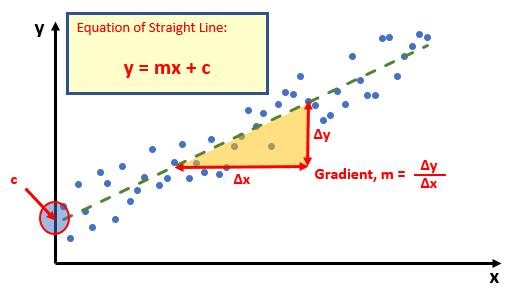

- Exponential curve fitting: if the relationship is not linear, a more suitable approach may be to calculate the best fit exponential curve:

The line may be defined by

y = bmx

or in more complex situations,

y = bm1x1m2x2…

if there are multiple x-values, where the dependent y-value is a function of the independent x-values. The m-values are bases corresponding to each exponent x-value, and b is a constant value. Note that y, x and m can be vectors. The array that LOGEST returns is {mn, mn-1, ..., m1, b}.

The LOGEST function employs the following syntax to operate:

LOGEST(known_y’s, [known_x’s], [constant], [statistics]).

The LOGEST function has the following arguments:

- known_y’s: this is required and represents the set of y-values that you already know in the relationship y = bmx (nothing to do with bicycles)

- known_x’s: this is optional and denotes the set of x-values that you may already know in the relationship y = bmx

- constant: this argument is optional and is a logical value specifying whether to force the constant b to equal one (1)

- statistics: this final argument is also optional. This too is a logical value specifying whether to return additional regression statistics (see below).

It should be further noted that:

- if the range of known_y's is either in a single column or else a single row, each column of known_x's is interpreted as a separate variable

- the range of known_x's may include one or more sets of variables. If only one variable is used, known_y's and known_x's can be ranges of any shape, as long as they have equal dimensions. If more than one variable is used, known_y's must be a vector (i.e. a range with a height of one row or a width of one column)

- if known_x's is omitted, it is assumed to be the array {1,2,3,...} that is the same size as known_y's

- if constant is TRUE or omitted, b is calculated normally

- if constant is FALSE, b is set equal to one (1) and the m-values are adjusted to fit y = mx

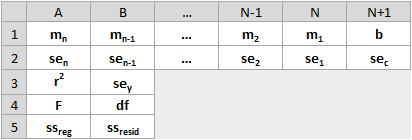

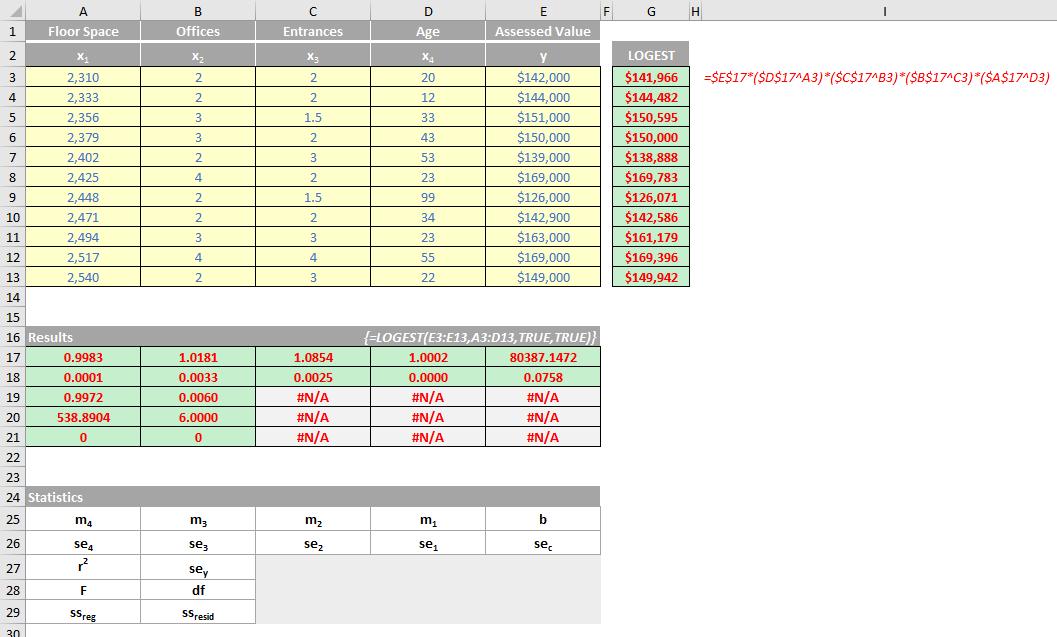

- if statistics is TRUE, LOGEST returns the additional regression statistics; as a result, the returned array is {mn, mn-1, ..., m1, c; sen, sen-1, ..., se1, sec; r2, sey; F, df; ssreg, ssresid}

- if statistics is FALSE or omitted, LOGEST returns only the m-coefficients and the constant b.

With regard to the additional regression statistics, these are produced in a grid (an array) as follows:

These statistics may be described as follows:

se1, se2, …, sen | Standard error values for the coefficients m1, m2, …, mn. The standard error is a measure of the statistical accuracy of an estimate, equal to the standard deviation of the theoretical distribution of a large population of such estimates. It is usually estimated in practice as the sample standard deviation divided by the square root of the sample size (assuming statistical independence of the values in the sample), |

| seb | Standard error value for the constant c (but this is equal to #N/A when constant is FALSE |

| r2 | This the coefficient of determination, which compares estimated and actual y-values and ranges, with a value between zero(0) and one (1). If it is 1, there is a perfect correlation in the sample, i.e. there is no difference between the estimated y-value and the actual y-value. At the other extreme, if the coefficient of determination is zero, the regression equation is not helpful in predicting a y-value. The coefficient of determination, R2, is the proportion of the variance in the dependent variable that is predictable from the independent variable(s). There are several definitions of R2, but they are not always equivalent (indeed, they can be negative on ocassion). One class of such cases includes that of simple linear regression where r2 is used instead of R2. When an intercept is included, then r2 is simply the square of the sample correlation coefficient (i.e. r) between the observed outcomes and the observed predictor values. Here, the coefficient of determination will range between zero (0) and one (1) |

| sey |

|

| F | This is the F statistic or the F-observed value. You should use the F statistic to determine whether the observed relationship between the dependent and independent variables occurs by chance This may be calculated using the F.INV.RT function in Excel. An F statistic is a value you get when you run an analysis of variance (ANOVA) test or a regression analysis to find out if the means between two populations are significantly different. It’s similar to a T statistic from a T-Test: a T-test will tell you if a single variable is statistically significant, whereas an F test will tell you if a group of variables are jointly significant |

| df | The degrees of freedom. You should use the degrees of freedom to help you find F-critical values in a statistical table. Compare the values you find in the table to the F statistic returned by LOGEST to determine a confidence level for the model. The degree(s) of freedom is the number of independent values or quantities which may be assigned to a statistical distribution. It is is calculated as follows. When no x columns are removed from the model due to collinearity:

In both cases, each x column that is removed due to collinearity increases the value of df by one (1) |

| ssreg | This is the regression sum of squares In regression analysis, Excel calculates for each point the squared difference between the y-value estimated for that point and its actual y-value. The sum of these squared differences is called the residual sum of squares, ssresid. Excel then calculates the total sum of squares, sstotal. When the constant argument = TRUE or is omitted, the total sum of squares is the sum of the squared differences between the actual y-values and the average of the y-values. When the constant argument = FALSE, the total sum of squares is the sum of the squares of the actual y-values (without subtracting the average y-value from each individual y-value). Then, the regression sum of squares, ssreg, may be found from: ssreg = sstotal - ssresid. The smaller the residual sum of squares is, compared with the total sum of squares, the larger the value of the coefficient of determination, r2, which is an indicator of how well the equation resulting from the regression analysis explains the relationship among the variables. The value of r2 equals ssreg/sstotal |

| ssresid | This is the residual sum of squares, as explained above. |

To be clear:

- the more a plot of your data resembles an exponential curve, the better the calculated line will fit your data. Like LINEST, LOGEST returns an array of values that describes a relationship among the values, but LINEST fits a straight line to your data; LOGEST fits an exponential curve

- When you have only one independent x-variable, you can obtain y-intercept (b) values directly by using the following formula:

y-intercept (b): INDEX(LOGEST(known_y's, known_x's), 2)

- You can use the y = bmx equation to predict future values of y, but Excel has a GROWTH function to do this for you

- When entering an array constant such as known_x's as an argument, use commas to separate values in the same row and semicolons to separate rows. Separator characters may be different depending upon your regional settings

- You should note that the y-values predicted by the regression equation may not be valid if they are outside the range of y-values you used to determine the equation.

Please see my comprehensive example below:

We’ll continue our A to Z of Excel Functions soon. Keep checking back – there’s a new blog post every business day.

A full page of the function articles can be found here.